In scientific application using triangular grids it is often the case to load a value from all three vertices of the triangle which is spread across RAM. In order to use SIMD instruction to speed up execution time of the program the three values must be stored into a single SIMD register to perform e.g. a parallel multiplication (for a scalar product). One approach to archive this is to load the values one by one into multiple registers and combine them in an additional step. Would it be a better approach to load the three values in on step into one register? This can be done with the AVX gather instruction.

As I don't know how to use SIMD Intrinsics in fortran, this example is C++ only.

We start by native approach how to load three values into a SIMD register using intrinsics datatypes like __m256d which can hold up to four 64bit double precision floating-point values where actually only three are used.

__m256d f1(const std::vector<double>& values, const std::array<int,3>& idx) {

const __m256d vec{ values[idx[0]], values[idx[1]], values[idx[2]]};

return vec;

}

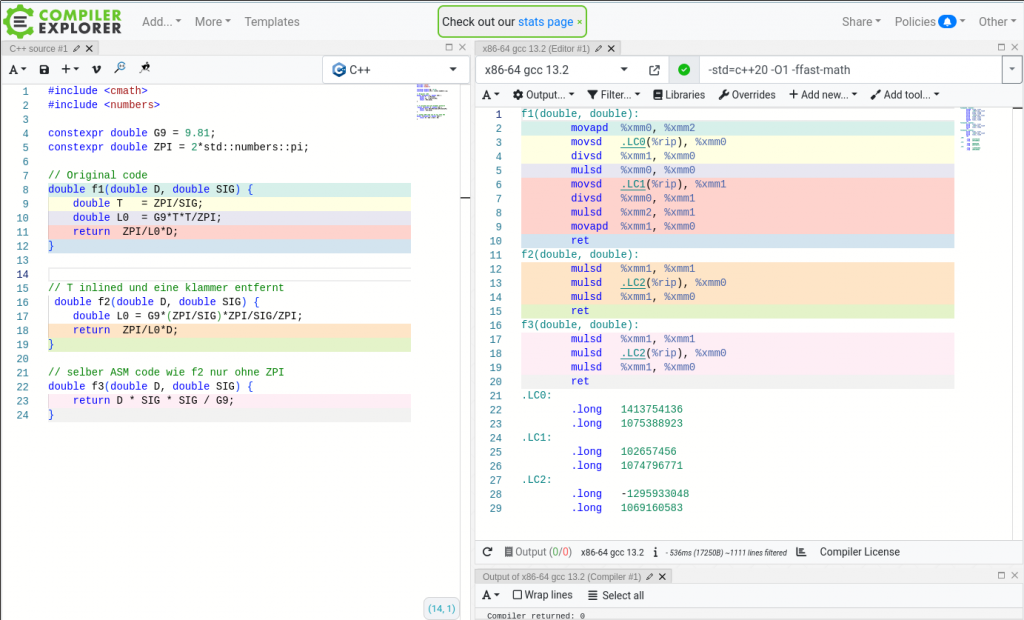

This generate the following assembler code independent of passed optimization flags -O1 -O2 -O3

movq (%rdi), %rax #

movslq (%rsi), %rcx # load idx0 in register rcx

movslq 4(%rsi), %rdx # load idx1 in register rdx

movslq 8(%rsi), %rsi # load idx2 in register rsi

vmovsd (%rax,%rcx,8), %xmm0 # load values[idx[0]] into xmm0

vmovq (%rax,%rsi,8), %xmm1 # load values[idx[2]] into xmm1

vmovhpd (%rax,%rdx,8), %xmm0, %xmm0 # load values[idx[1]] into hight part of xmm0

vinsertf128 $0x1, %xmm1, %ymm0, %ymm0 # combine xmm0 and xmm1 into 512bit register ymm0

ret

To load 3 values at once we can use the _mm256_mask_i32gather_pd instruction which take a base address and offsets in a variable of type __m128i. To only load 3 instead of 4 values there is an additional mask variable.

The C++ code looks like this now:

__m256d f2(const std::vector<double>& values, const std::array<int,3>& idx) {

// mask to load 3 instead of 4 values

const __m128i mask = _mm_setr_epi32(-1, -1, -1, 0);

// Copy Element Node IDs into SIMD register

const __m128i vindex = _mm_maskload_epi32(idx.data(), mask);

// Load 3 double values

constexpr int scale = 8;

// mask to load 3 values but this time as 256d variable (but docu say it must be 256i, bug?)

const __m256d mask2 = _mm256_setr_pd (-1, -1, -1, 0);

const __m256d vec{_mm256_mask_i32gather_pd (__m256d{}, values.data(), vindex, mask2, scale)};

return vec;

}

This generate the following assembler code independent of passed optimization flags -O1 -O2 -O3

movq (%rdi), %rax #

vmovapd .LC1(%rip), %ymm2 # load mask2 into ymm2

vxorpd %xmm0, %xmm0, %xmm0 # zero register xmm0

vmovdqa .LC0(%rip), %xmm1 # load mask1 into xmm1

vpmaskmovd (%rsi), %xmm1, %xmm1 # load idx into xmm1

vgatherdpd %ymm2, (%rax,%xmm1,8), %ymm0 # load values[idx] into ymm0

ret

With AVX512 it is even possible the gather and scatter 3 values at ones. This time the mask variable is easier to create.

void f3(std::vector<double>& values, const std::array<int,3>& idx) {

// Copy Element Node IDs into SIMD register

constexpr int mask = 0b00000111;

const __m128i vindex = _mm_maskz_loadu_epi32(mask, idx.data());

// Load 3 double values

constexpr int scale = 8;

const __m256d vec{_mm256_mmask_i32gather_pd (__m256d{}, mask, vindex, values.data(), scale)};

// Store 3 double vales

_mm256_mask_i32scatter_pd(values.data(), mask, vindex, vec, scale);

}

This generate the following assembler code independent of passed optimization flags -O1 -O2 -O3

movl $7, %eax # load mask into eax

vxorpd %xmm0, %xmm0, %xmm0 # zero register xmm0

kmovw %eax, %k1 # convert mask into the mask register k1

movq (%rdi), %rax #

vmovdqu32 (%rsi), %xmm1{%k1}{z} # load idx into xmm1

kmovw %k1, %k2 # copy mask register. why is that so? bug?

vgatherdpd (%rax,%xmm1,8), %ymm0{%k2} # load values[idx] into ymm0

vscatterdpd %ymm0, (%rax,%xmm1,8){%k1} # stpre ymm0 into values[idx]

vzeroupper # can be optimization away?

ret

To measure I created a little testcase with the presented different variants on the following CPUs: Intel Core i5-10500, Intel Xeon E5-2695 v4, Intel Core i9-7900X. Unfortunately there is no speedup using AVX. There is even no slowdown (except for 1-2%).

https://www.intel.com/content/www/us/en/docs/intrinsics-guide/index.html#text=m256_i32gather&expand=4474&ig_expand=4252,4250,2125,3720,4058,3723,3723&techs=AVX_ALL